API Tests - What's in a Name?

In 2016, I gave a talk at the ExpoQA conference in Madrid. The talk was called “Need for Speed”[1]. In that talk, I omitted an important piece of information that I thought was obvious to everyone. In hindsight, however, it seems it was obvious only to me.

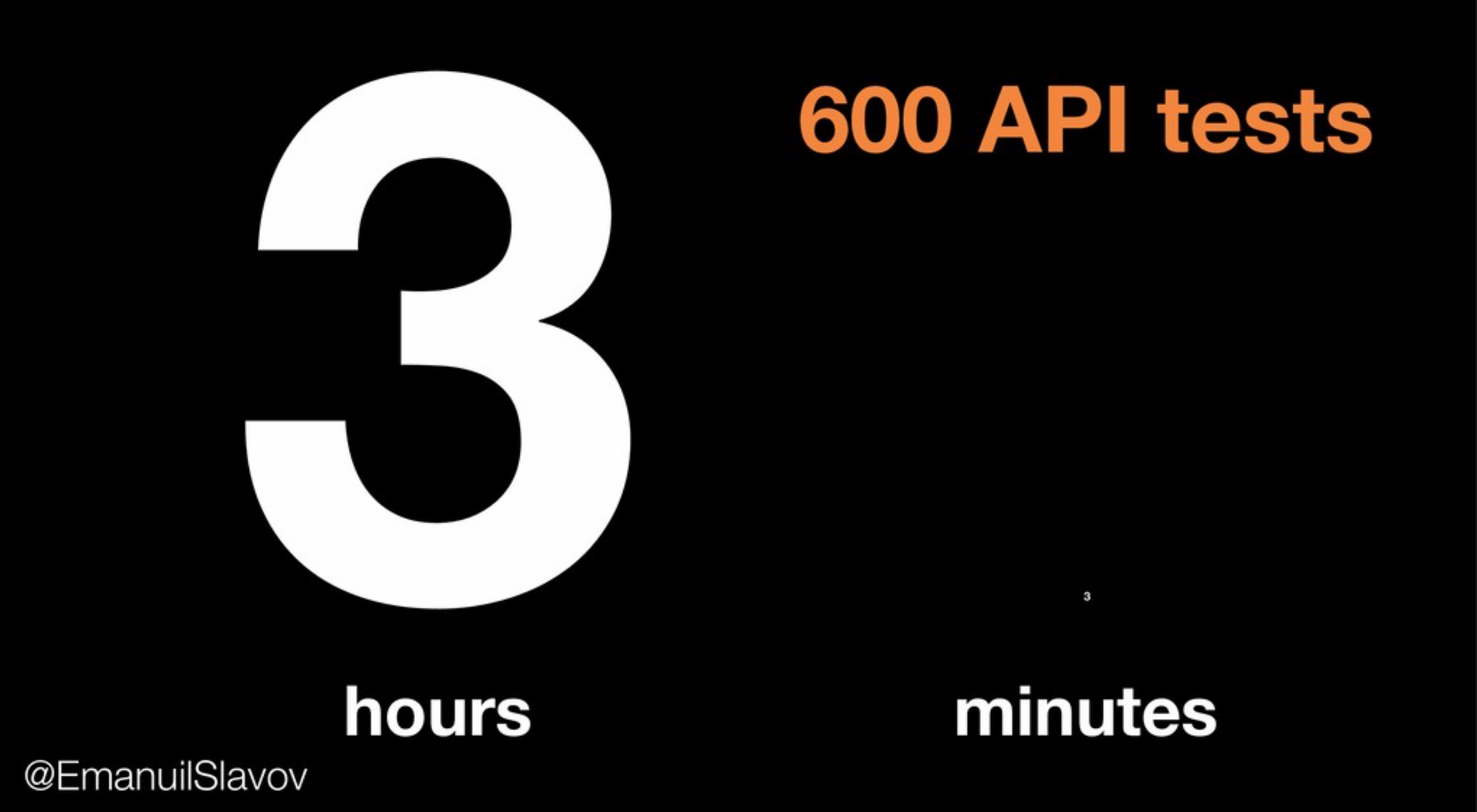

One of the slides in this talk is about how we decreased the execution time of 600 API tests from 3 hours to (currently less than) 3 minutes:

After the ExpoQA conference, I was reviewing my Twitter notifications and saw that someone had been live-tweeting while this slide was up. The tweet read something along the lines of: “Wow, Emanuil, what kind of slow system are you working on that it takes 3 hours to execute 600 API tests?” I didn’t give it much thought back then, but in the following years, whenever I gave a talk, I made a point of clarifying what I mean by API tests[2].

Most people today understand an “API test” as a single request to a web-based API (REST or SOAP) followed by an evaluation of the response. Such a test can be performed with tools like Postman, SoapUI, or similar.

What I mean by an “API test” is something different. It is a high-level test[3] that consists of more than one request to the API. In fact, in our current suite, a single “API test” makes between 15 and 20 requests to the backend on average. Such a test performs the following operations:

- Generating the initial test data and seeding it into the system

- Logging in / obtaining an authentication token

- Performing one or more actions

- Making request(s) to assert the results of the actions

- Removing the test data from the system
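The five operations above can be sketched as a single test function. This is a minimal illustration under stated assumptions, not our actual test code: `FakeBackend`, its endpoints (`/users`, `/login`, `/todos`) and the to-do domain are all hypothetical, and the in-memory backend exists only so the sketch runs on its own. In a real suite, each `api.request(...)` call would be an HTTP request to a fully deployed backend.

```python
import uuid


class FakeBackend:
    """Hypothetical in-memory stand-in for a deployed backend.

    It answers (method, path) requests the way a small REST service might,
    so the test below runs without a network or a database.
    """

    def __init__(self):
        self.users = {}   # username -> password
        self.tokens = {}  # auth token -> username
        self.todos = {}   # todo id -> {"owner": ..., "title": ..., "done": ...}

    def request(self, method, path, body=None, token=None):
        """Return (status_code, json_like_body) for one API call."""
        parts = path.strip("/").split("/")
        if (method, parts) == ("POST", ["users"]):
            self.users[body["username"]] = body["password"]
            return 201, {"username": body["username"]}
        if (method, parts) == ("POST", ["login"]):
            if self.users.get(body["username"]) != body["password"]:
                return 401, {"error": "invalid credentials"}
            new_token = uuid.uuid4().hex
            self.tokens[new_token] = body["username"]
            return 200, {"token": new_token}
        user = self.tokens.get(token)
        if user is None:  # every other endpoint requires a valid token
            return 401, {"error": "not authenticated"}
        if (method, parts) == ("POST", ["todos"]):
            todo_id = uuid.uuid4().hex
            self.todos[todo_id] = {"owner": user, "title": body["title"], "done": False}
            return 201, {"id": todo_id}
        if len(parts) == 2 and parts[0] == "todos" and parts[1] in self.todos:
            todo_id = parts[1]
            if method == "GET":
                return 200, dict(self.todos[todo_id], id=todo_id)
            if method == "PATCH":
                self.todos[todo_id].update(body)
                return 200, dict(self.todos[todo_id], id=todo_id)
            if method == "DELETE":
                del self.todos[todo_id]
                return 204, None
        if (method, parts) == ("DELETE", ["users", user]):
            del self.users[user]
            return 204, None
        return 404, {"error": "not found"}


def mark_todo_as_done_api_test(api):
    """One "API test": seed data, log in, act, assert, clean up."""
    # 1. Generate unique test data and seed it into the system.
    username, password = f"user-{uuid.uuid4().hex[:8]}", "s3cret"
    status, _ = api.request("POST", "/users", {"username": username, "password": password})
    assert status == 201
    # 2. Log in and obtain an authentication token.
    status, body = api.request("POST", "/login", {"username": username, "password": password})
    assert status == 200
    token = body["token"]
    todo_id = None
    try:
        # 3. Actions: the same requests a web UI or mobile app would send.
        status, body = api.request("POST", "/todos", {"title": "Write slides"}, token)
        assert status == 201
        todo_id = body["id"]
        status, _ = api.request("PATCH", f"/todos/{todo_id}", {"done": True}, token)
        assert status == 200
        # 4. Assert the outcome with a separate read request.
        status, body = api.request("GET", f"/todos/{todo_id}", token=token)
        assert status == 200 and body["done"] is True
    finally:
        # 5. Remove the test data, even if an assertion above failed.
        if todo_id is not None:
            api.request("DELETE", f"/todos/{todo_id}", token=token)
        api.request("DELETE", f"/users/{username}", token=token)


if __name__ == "__main__":
    backend = FakeBackend()
    mark_todo_as_done_api_test(backend)
    print("passed; leftover users:", len(backend.users), "todos:", len(backend.todos))
```

Even this toy scenario sends seven requests, which hints at why a realistic test quickly reaches a dozen or more. Putting the cleanup in `finally` means a failing assertion does not leave its data behind to disturb later tests.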

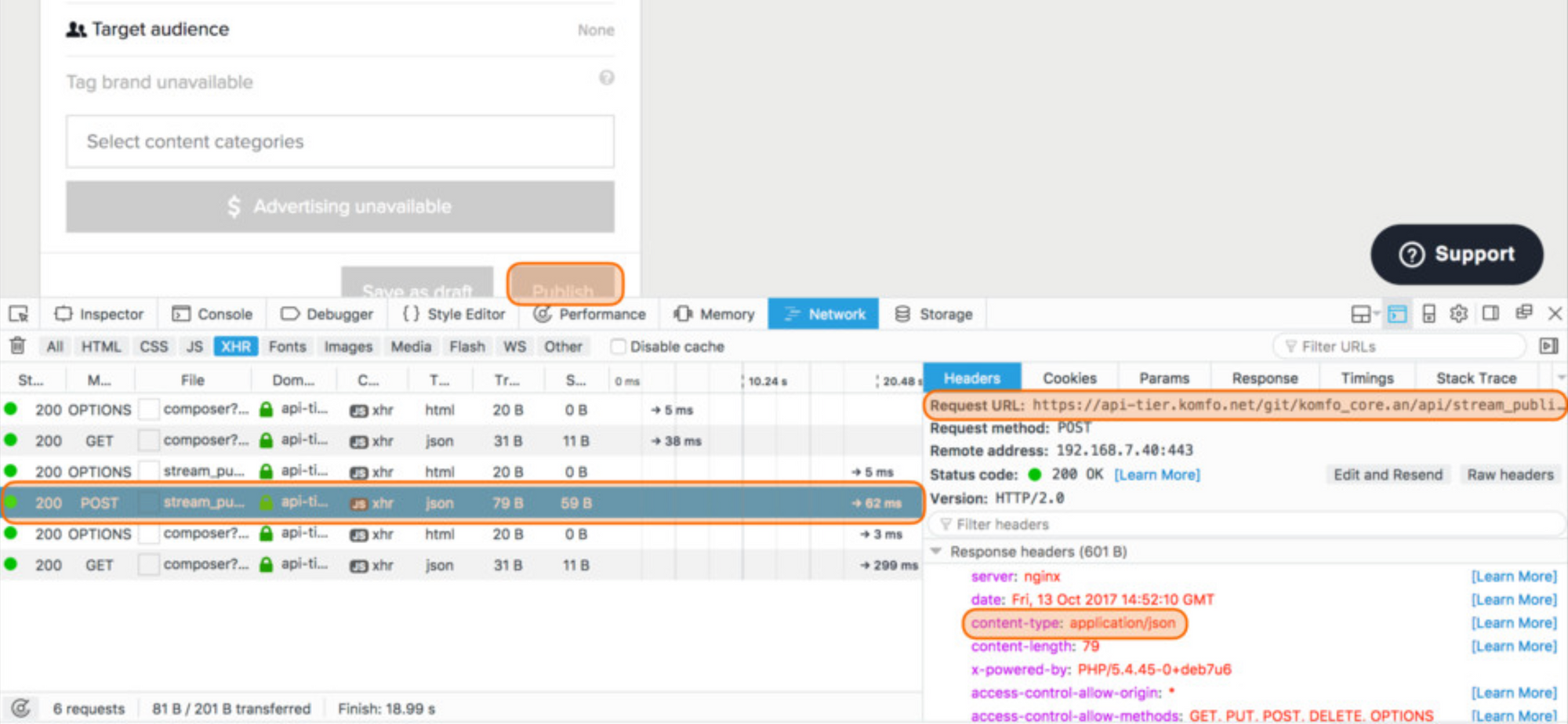

You may think that such a test more or less resembles an end-to-end test, and you would be right. These API tests use the same requests that a web-based UI (or a mobile app) makes to the backend.

So these tests are essentially the same as automated UI tests[4], except that they skip the unstable UI layer.

The main advantage of such tests is that, while they still exercise the application’s backend logic, they are orders of magnitude faster and far more stable. The drawback is that they do not test the frontend. However, the frontend can be tested separately by mocking the real backend.

These frontend tests can run against the fully assembled UI (e.g. for functionality that manipulates the DOM), or they can test only functions that do not interact with the UI. For the former, the backend can be mocked with a wide variety of tools, including Nagual, one that I developed for a similar purpose.
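For frontend tests that run against the assembled UI, mocking the backend boils down to a server that answers the UI’s requests with canned responses. The following is a minimal, hypothetical stub built on Python’s standard `http.server` module; it is not Nagual and does not reflect Nagual’s API. The routes and payloads in `CANNED` are made up for illustration, and matching is on the exact path (query string included).

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical canned responses keyed by (HTTP method, path). The UI under test
# receives these instead of data from the real backend.
CANNED = {
    ("GET", "/api/todos"): (200, [{"id": "1", "title": "Write slides", "done": False}]),
    ("POST", "/api/todos"): (201, {"id": "2"}),
}


class StubBackendHandler(BaseHTTPRequestHandler):
    """Answers known routes with canned JSON and anything else with 404."""

    def _reply(self):
        # Read and discard any request body so the connection stays in a clean state.
        length = int(self.headers.get("Content-Length", 0))
        if length:
            self.rfile.read(length)
        status, payload = CANNED.get((self.command, self.path), (404, {"error": "no stub"}))
        data = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    # http.server dispatches to do_<METHOD>; reuse one handler for every verb we stub.
    do_GET = do_POST = do_PUT = do_DELETE = _reply

    def log_message(self, format, *args):
        pass  # silence the default per-request logging to stderr


def start_stub(port=0):
    """Serve the stub in a background thread; port=0 picks a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), StubBackendHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


if __name__ == "__main__":
    server = start_stub()
    base_url = f"http://127.0.0.1:{server.server_address[1]}"
    with urllib.request.urlopen(base_url + "/api/todos") as resp:
        print(resp.status, json.load(resp))
    server.shutdown()
    server.server_close()
```

A frontend test would then point the UI’s API base URL at the stub (how that is configured depends on the app) and assert on what the UI renders from the canned data. Because the responses never change, such tests stay fast and deterministic, at the cost of having to keep the canned payloads in sync with the real API.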

I think that for modern applications, having separate API tests plus dedicated UI tests with a mocked backend is a superior approach to having only full UI-based end-to-end tests. Why do people keep writing only the latter? For a number of reasons, the main ones being:

- They are used to working that way. A while ago[5], all the HTML and all the data were assembled in the backend and sent to the frontend only to be displayed. The only way to interact with an app’s backend was through the UI of a browser (or a desktop application). But apps have evolved. Today the backend sends only data, in JSON or XML format, and it’s up to the frontend to construct the HTML and bind the data to it. That gives us a shorter path to the backend[6]: the web services.

- In the old days, there were many differences between web browsers; even basic HTML could be rendered differently. Back then, it made sense to use automation to run the same test on different browsers. Nowadays, the differences are negligible and don’t matter for most apps[7].

- Management loves watching a Selenium UI test run; it feels like magic. Clicking, selecting from drop-down menus, entering text, navigating, all without human interaction. The work a Selenium UI test performs is highly visible. A web service test run is invisible, save for the occasional data dump in the console and the final status of the test.

- “The only way to test a system is the same way an end user would use it.” Not true, as we can decompose user actions into smaller, more manageable chunks of work. API and unit tests are enough. Occasionally we’d still need some high-level Selenium UI tests, but those would mostly verify that all the moving parts of an app are wired correctly (e.g. that the frontend talks to the right endpoint).

- There is a large industry focused solely on UI automation, and it constantly promotes the idea that the only way to do test automation is via the UI. It ranges from commercial tools offered as alternatives to open-source Selenium to on-demand cloud environments where you can rent a dazzling array of browser and operating system (or mobile OS) combinations. To top it all off, there is a wide range of UI automation consultants offering their services.

- Most of the people who write automated UI tests are either not developers or are very disconnected from the developers. Developers, who usually write only JS unit tests, know there is little sense in testing the same functionality again with slower end-to-end UI tests. However, some companies have QA engineers who write only end-to-end UI tests. They may not work at the same cadence as the developers, or the QA team may be entirely separate from the development team (e.g. an outsourced QA department). In such cases, one of the easiest things to measure[8] is how many test cases are created (a raw count).

1. Later that year, I was invited by Google to present it at GTAC.

2. And, for that matter, all test-related terminology. For example, ‘unit tests’ means different things at different companies.

3. “High-level” means that the backend system (or large parts of it) is fully deployed. There is a real database, and there are real network connections between the different components. Connections to third-party services are either established or stubbed at a higher level.

4. Usually automated with a tool like Selenium.

5. It depends on the industry, but for most, ‘a while ago’ means 7 to 10 years.

6. This is where the majority of the business logic resides.

7. The most notable exception may be e-commerce apps, where a few pixels or a different color can make a big difference.

8. But also the most inappropriate one, as it is purely a vanity metric.