Complexity Can Kill You (Literally)

During the past months I’ve been presenting at various software testing conferences (ISTA, STAREAST, NordicTestingDays) on how to write reliable automated tests for legacy applications. In order for this effort to be successful one need to get to know the whole system well (and apply systems thinking), to not be confined in one’s own area of expertise.

“The key is to practice continual improvement and think of manufacturing as a system, not as bits and pieces.” W. Edwards Deming

Some of the topics in this presentation were considered by QA Engineers as “for developers only” which is wrong.

One such topic is code complexity (a.k.a Cyclomatic complexity). This is really important stuff, but it’s not popular in testing conferences. I talk about it in two slides, but it really deserves more than that. So this is the long version.

What is Code Complexity

The simplest definition is that code complexity is the number of unique paths through a given method. If a method does not have any conditional statements, then its complexity is 1 (only one code path):

function testPrint() {

echo('Hello World');

}

If a method has one conditional statement then its complexity is 2 (two code paths — when one of the conditions is satisfied and when one when the conditions is not satisfied):

function testPrint($parameter) {

if($parameter) {

echo('Hello World');

}

}

There is another, similar, metric called Weighted Methods Per Class, but in this blog post, I’m focusing only on the method complexity.

The Magical Number 10

It’s been accepted that code complexity should not exceed 10 for a given method. This is also the default threshold in all static code analyzers.

So why 10?

First of all, based on statistical data performed on actual programs (see the link above). The more complex the method — the more opportunities to introduce a bug.

Second, in order to fully cover a method with unit tests, you need to write as many tests as is the method complexity. The more complex the method, the more tests are need to cover every condition. Also the more complex the method, the longer it is. It does more than one thing. It has way too much reasons to change. You scroll back and forth, you using debugger because of too many variables, etc. Complexity is well… a complex thing.

Third, the average human can just keep track of limited amount of objects in different state. It’s called 7 ± 2 rule. Take the best case - 9 (+ 1 for being a developer). Just like a juggler, you can keep a limited amount of balls in the air.

It’s really, really important to keep complexity 10 or less.

Why complexity can kill you

You probably know about the problem Toyota had with so called ‘Unintended Acceleration’. At the end it costed $1,2B to settle and resulted in at least 12 fatalities. As part of the lawsuits that followed, a thorough analysis of the firmware source code was performed. It lasted for more than 18 months.

While Toyota is known for excellent hardware manufacturing process, the same cannot be said for the software (It’s not clear though, if this code was created by contractor. However Toyota is also known to keep contractors to the same high quality standards).

The analysts found that the function responsible for the throttle angle had complexity of over 100. While this complex function is not proved to be the cause of the accidents, its code quality is certainly far from any decent code standards. A bug hiding in this function certainly can cause unintended acceleration. The way the current Toyota firmware is designed, this cannot easily be tracked, though. And this is just the tip of the iceberg, read the whole document from the above link to get an idea of the bad development practices that were used.

One potential explanation may be that building something that you can touch is regarded highly in Japan. The same can not be said if make imaginary stuff like software. Whatever the reason is, I’d really like to see Toyota getting their embedded software act together as I’m currently driving one.

Death by a thousand paper cuts

Methods do not grow overnight to become unmanageable monsters - 1000+ lines long, 100+ complexity. It happens slowly and unnoticeably. It’s how technical debt gets accumulated. Here some of the reasons why:

-

Developers are in a hurry to push a feature. They want to come back, and do the right thing later, but management would not give them time (it has no immediate business value).

-

Methods mixing different levels of abstraction. For example has both domain business logic and parses XML.

-

There is too much error handling, the codebase is not using exceptions to signal a failure.

-

The codebase is old, badly structured, full of broken windows. “I’ll just add one more if statement. What bad can happen?”

-

Developers are not educated about the implications of high code complexity.

Remember this scene from Star Wars. Our heroes are about to be squashed by the wall of the trash compactor in the Death Star. This is how you feel like working with codebase with high complexity methods. With each day passing, the situation gradually becomes worse. Project will grind to a hold if you don’t take drastic measure.

How to fix the mess

A ratchet a device that can rotate only in one direction. This is what you need so that your codebase does not get in worse shape than yesterday. One turn at a time, slowly tidying the mess. No loosing up, only getting better with time.

To give credit where credit is due, this technique was described a while ago by Chris Stevenson (currently his page is not active).

You start by assessing your current situation. What is the highest complexity count in your methods?

Let’s say you have one method with complexity 50. You setup your CI job to fail the build if any method reaches complexity of 51 or over. When you get a chance, you refactor to lower the complexity of this method to be 45, by extracting part of the code in another method (A method with 50 complexity does too much stuff anyway. It should be easy to find natural boundary to extract small code snippet that does one and only one thing). Now you lower your complexity threshold for failing the build to 45 (the ratchet turns one teeth). Rinse and repeat. Make small steps. Sometimes you’ll lower the complexity by 1 at a time across all the codebase, but that’s OK.

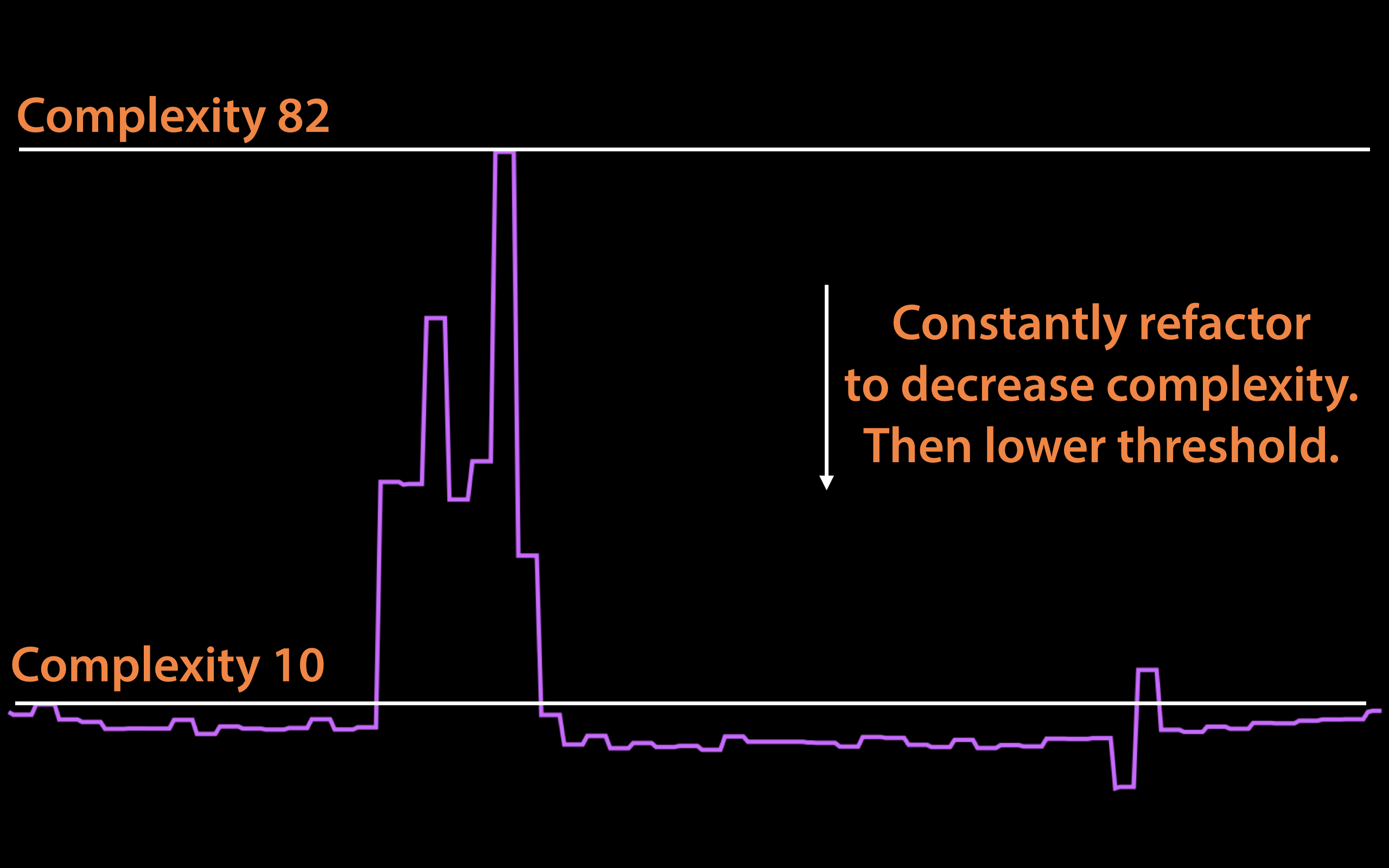

Here is how a histogram of codebase may look like:

Тhe lower you reach, the more methods you’ll need to refactor to establish the next threshold.

Build Quality In

If you setup the build to fail every time you push code with high complexity, you’re building quality in. This is also one of the 14 management principles defined by the father of Japan’s industrial rebirth after the second world war - W. Edwards Deming:

“Cease dependence on inspection to achieve quality. Eliminate the need for inspection on a mass basis by building quality into the product in the first place.”

Not surprisingly, the same principle is one of the two pillars in the Toyota Production System, called 'jidoka'. Looking through software development lenses it means stop the system (build and deployment is not possible) immediately when anomaly is detected (high complexity code being pushed). A human needs to make immediate corrective actions (refactor the code, extract a method). Then investigate the root cause (in this case it’s clear — high complexity). This is also true for all the three pillars of automated testing, all the checks running on every code push.

Do not rely on “refactoring” days, hardening springs, stabilization phases. This is how you build quality in and give immediate feedback to the developers.

Is 10 universal threshold?

Of course not, but it’s a good default. One place where it can differ is when you work with dynamically typed languages. You can try to perform any operation on any type, no compiler would stop you. However you run considerable risk of failing the operation at runtime, if the type is not correct. Those languages give you lots of flexibility but are real pain in the ass to write quality code if you don’t know what you’re doing. The irony is that those languages are really easy to start with, learning, but it takes an expert to produce maintainable code.

Here is simple PHP example:

function normalizeCreatedTime($created_time) {

$item = array();

// an object

if(is_object($created_time)) {

$item['created_time'] = $created_time->value;

}

// array

elseif(is_array($created_time)) {

$item['created_time'] = $created_time['value'];

}

// int or string

else {

$item['created_time'] = $created_time;

}

return $item;

}

In this case, we do not know the type of variable ahead of time, so we need to do some runtime checks. The complexity of this method is 4. Notice how different it is when you’re using statically typed language. You’ll know the type of the variable ahead of time, so no need to do any runtime type checks (also the compiler will complain). The complexity in this case is 1.

Then it was originally defined, cyclomatic complexity threshold of 10 was sufficient for compiled, statically typed languages. It is still valid for those. However, if you’re using dynamically typed, interpreted languages, you can relax the threshold to 15.

Final considerations

For best results combine code complexity threshold with method lines threshold. A method should be small enough to fit on one screen. Depending on the monitor and the font you use, this would be no more than 50-100 lines.

As with every metric, pay attention how you're using it. Explain to the developers why it exists in the first place — to make their life easier, to stop the codebase from rotting at time goes by, to prevent potential bugs, simple codebase to maintain, easy to debug, less refactoring and less unit tests per method. Developers need to understand that there are long term benefits behind the numbers. Do not abuse this threshold to punish or incentives developers. They are smart and will find a way to game the system.